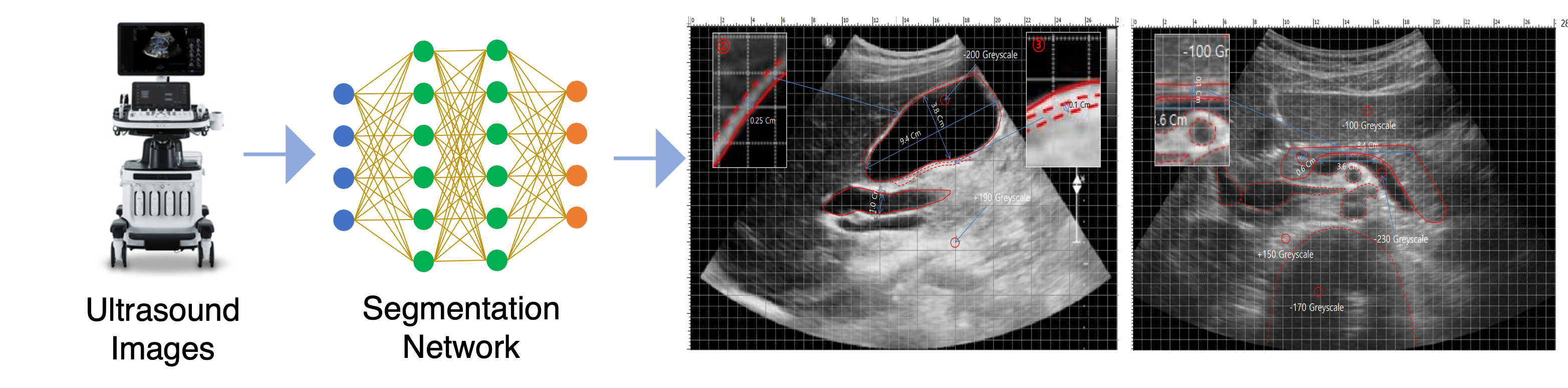

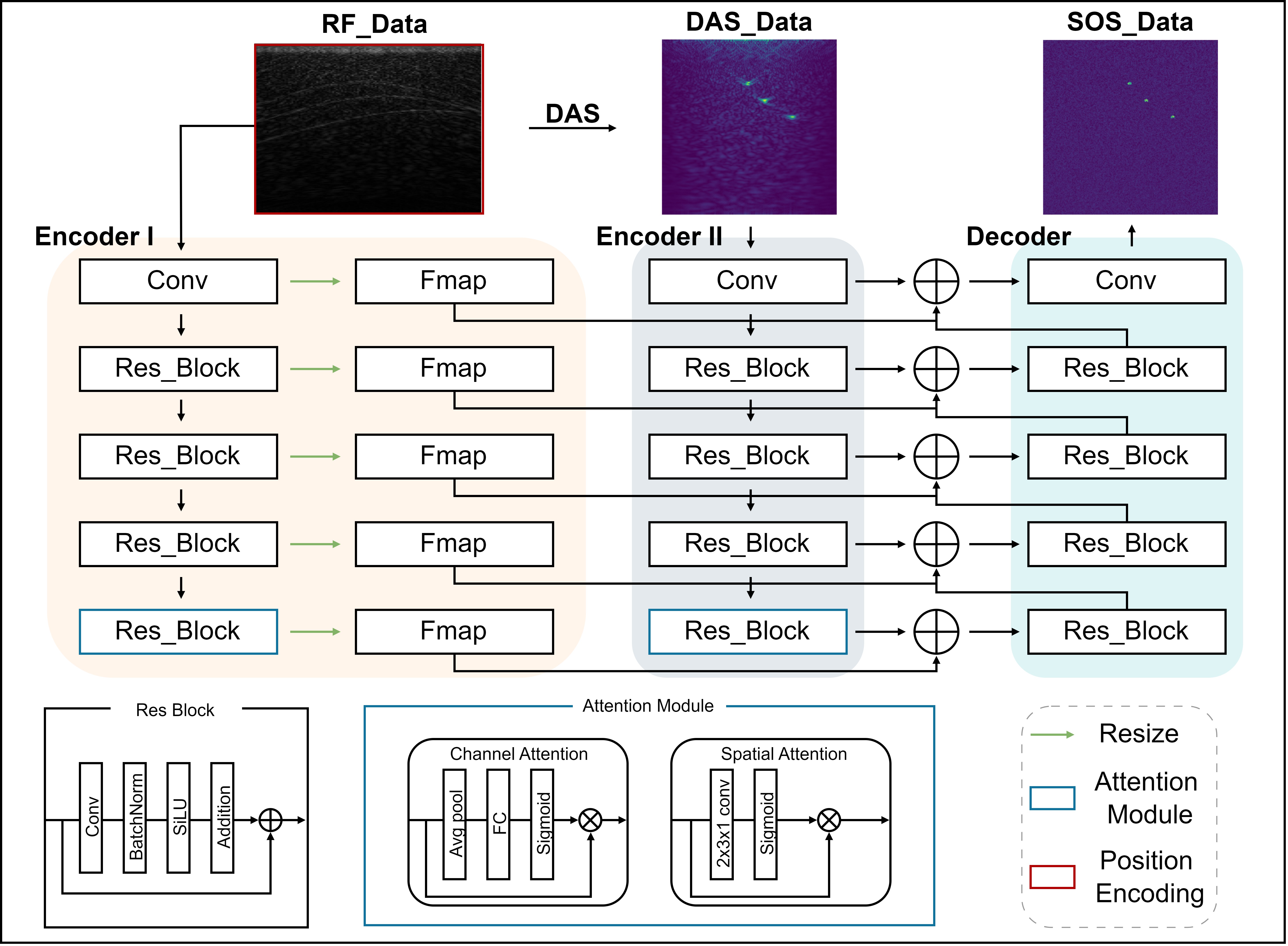

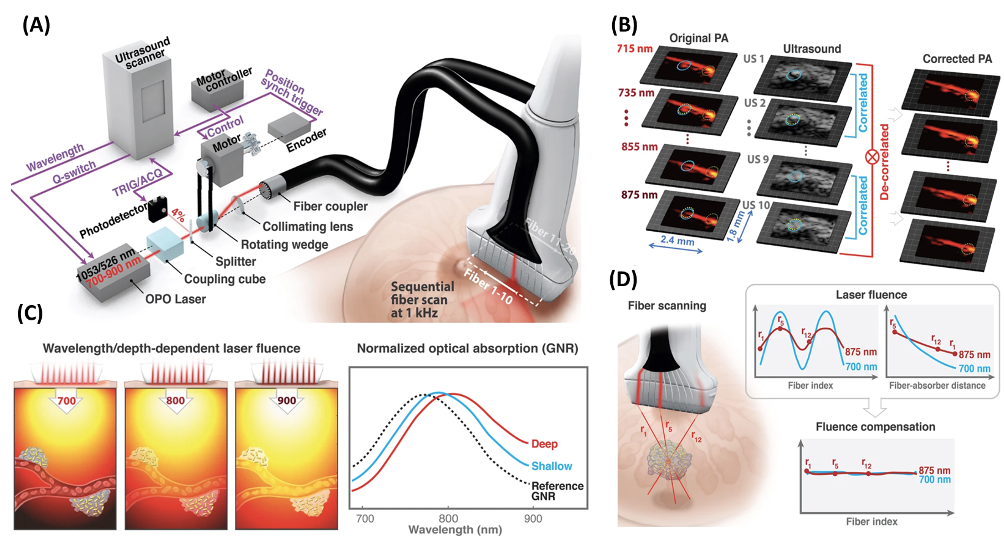

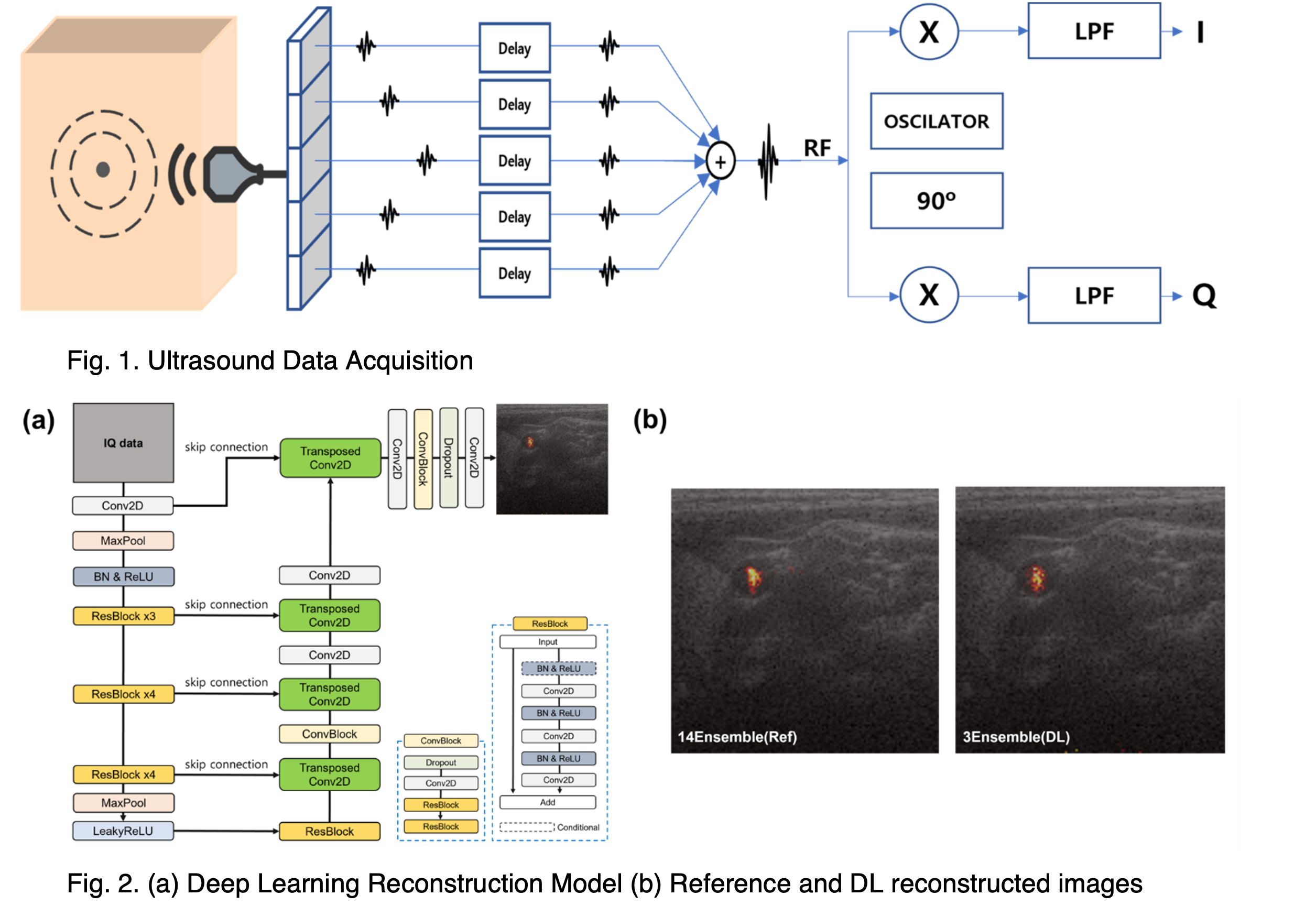

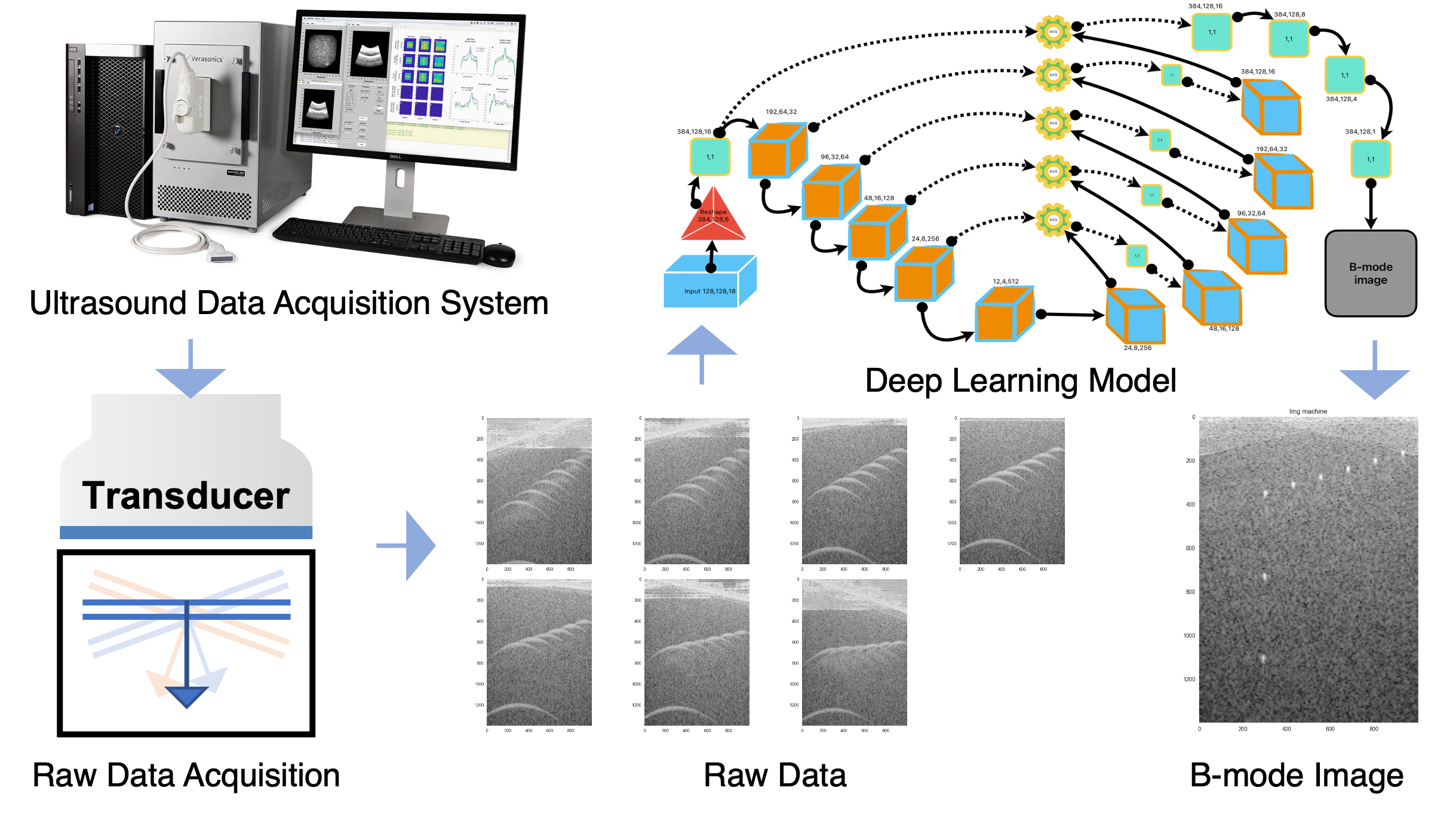

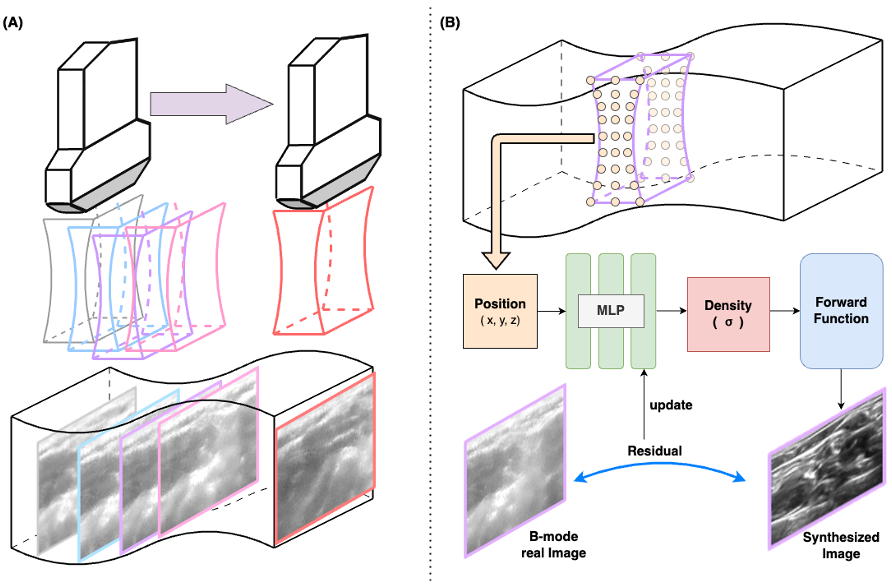

Conventional ultrasound (US) systems with one-dimensional (1D) array transducers are typically used to capture cross-sectional images of regions of interest (RoIs). US imaging offers cost-efficiency, safety, and real-time capability, but its limited two-dimensional (2D) field of view (FoV) means that it requires skilled sonographers. To address this limitation, we propose a deep learning (DL)-based scan motion estimation framework composed of a ResNet-based encoder, a correlation operation, and a customized global-local attention module. The estimated relative motions are accumulated to reconstruct the absolute scan trajectory. Each 2D US frame is then placed in 3D space along the reconstructed scan path, generating a 3D US volume. Furthermore, combining this approach with Power Doppler (PD) or Photoacoustic (PA) imaging enables the reconstruction and visualization of vascular structures.

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT).
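The trajectory reconstruction step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names (`rigid_transform`, `integrate_trajectory`, `pixel_to_world`), the restriction to rotation about a single axis, and the convention that each relative transform maps frame i coordinates into frame i-1 coordinates are all assumptions made for illustration. The learned motion estimator itself is not shown; the relative motions are simply taken as given inputs.

```python
import math


def rigid_transform(rz_deg, tx, ty, tz):
    """Build a 4x4 homogeneous transform: rotation about z, then translation.

    Hypothetical helper; a real system would use full 6-DoF motion.
    """
    c = math.cos(math.radians(rz_deg))
    s = math.sin(math.radians(rz_deg))
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul4(a, b):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
        for i in range(4)
    ]


def integrate_trajectory(relative_transforms):
    """Chain frame-to-frame transforms into absolute poses.

    The first frame is taken as the world origin (an assumption), so the
    result holds one more pose than there are relative transforms.
    """
    pose = rigid_transform(0.0, 0.0, 0.0, 0.0)  # identity
    poses = [pose]
    for rel in relative_transforms:
        pose = matmul4(pose, rel)
        poses.append(pose)
    return poses


def pixel_to_world(pose, u, v, spacing_mm):
    """Map pixel (u, v) of a 2D frame (image plane at z = 0) into 3D space."""
    p = [u * spacing_mm, v * spacing_mm, 0.0, 1.0]
    return tuple(sum(pose[i][k] * p[k] for k in range(4)) for i in range(3))


# Example: three identical steps of 0.5 mm along the out-of-plane (z) axis.
steps = [rigid_transform(0.0, 0.0, 0.0, 0.5)] * 3
poses = integrate_trajectory(steps)
point = pixel_to_world(poses[-1], 10, 20, 0.1)
```

Because each absolute pose is a product of all preceding relative estimates, any per-step estimation error also accumulates along the scan, which is why the accuracy of the relative motion estimates matters for the final volume.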